How to prepare video training set for AI application?AI applications are increadibly popular nowadays. They could solve various very complicated tasks and quite often they can do that much better than humans. This is the fact and we see that the number of such applications is growing rapidly. Let's consider the situation from inside, just to understand how it goes and what do we need to proceed with AI solutions. Here we consider only those AI-related tasks which are connected with image and video processing. We mean drone control, self-driving cars, trains, UAV and much more.

To solve such a task, we need to have a lot of data for further inference. This is very important starting point. We do need lots of high quality data for a particular set of situations. Where and how we can get that data? This is a huge problem. Sure, we can use any standard training set to ensure machine learning, but that set could hardly correspond to the real situation that we need to check and to control. We can train our neural network on such a data, but we can't be confident that on real data the system will behave correctly. Now we can formulate the task to be solved: how to collect appropriate video data to train neural network? The answer is not really difficult: if we are talking about video applications, then we need to record video data in proper situations. For example, for self-driving car we need to install necessary amount of cameras on the car and to get lots of recordings. At that point we can see that our training set will depend on particular camera model and image processing algorithms which are utilized in that camera application. Such a camera system generates some artifacts and our neural network will be trained on such a data. If we succeed, then in real situation our AI application will work with camera and software which are alike, so real data will remind those from the training data set. These items from typical camera system are important

Apart from that, we need also to take into account the following

This is not just one task - this is much more, but such an approach could help us to build robast and reliable solution. We need to choose a camera and software before we do any network training. To summarize, to collect data for neural network training for video applications, we need to have a camera system together with processing software which will be utilized later on in real case. What we can offer to solve the task?

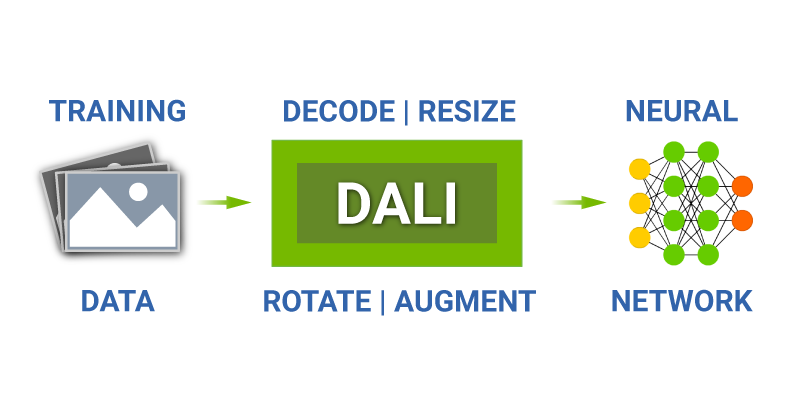

How we train neural network?If we have a look at NVIDIA DALI project, we can see that as a starting point we utilize standard image database, decode jpeg images and then we apply several image processing transforms to train the network on changed images which could be derived from the original set via the following operations:

It could be the way to significantly increase the number of images in the database for training. This is actually a virtual increase, though images are not the same and such an approach turns out to be useful. Actually, we can do something alike for video as well. We suggest to shoot video in RAW and then to choose different sets of parameters for GPU-based RAW processing to get a lot of new image series which are originated from just one RAW video. As soon as we can get much better image quality if we start processing from RAW, we can prepare lots of different videos for neural network training. GPU-based RAW processing takes minimum time, so it should not be a bottleneck. These are transforms which could be applied to RAW video

This is the approach to simulate in the software different lighting conditions in terms of exposure control and spectral characteristics of illumination. We can also simulate various lens and orientations, so the total number of new videos for training could be huge. There is no need to save these processed videos, we can generate them on-the-fly by doing realtime RAW processing on GPU. This is the task we have already solved at Fastvideo SDK and now we could utilize it for neural network training. |