JPEG XS – modern visually-lossless low-latency lightweight video coding solution

Authors: Fyodor Serzhenko and Anton Boyarchenkov

JPEG XS is a recent image and video coding system developed by the Joint Photographic Experts Group and published as international standard ISO/IEC 21122 in 2019 [1] (second edition in 2022 [2]). Unlike many earlier standards developed by the JPEG committee, JPEG XS addresses video compression. What sets it apart from other video compression techniques is its different set of priorities. Previous approaches treated coding efficiency as the highest priority, while latency and complexity were, at best, secondary goals. That is why uncompressed video streams are still used for transmission and storage. JPEG XS has now emerged as a viable alternative to the uncompressed form.

Background of JPEG XS

There is a constant tension between the benefits of uncompressed video and the very high bandwidth it requires for delivery. Network bandwidth continues to increase, but so do the resolution and complexity of video. With the emergence of formats such as Ultra-High Definition (4K, 8K), High Dynamic Range, High Frame Rate, and panoramic (360°) video, both storage and bandwidth requirements are growing rapidly [3] [4].

Instead of a costly upgrade or replacement of deployed infrastructure, we can use transparent compression to reduce the stream sizes of these demanding video formats. Such compression must be visually lossless, low latency and low complexity. However, the existing codecs (see the short review in section 3) could not satisfy all of these requirements simultaneously, because they were mostly designed with coding efficiency as the main goal.

Improving coding efficiency is not the only motivation for video compression, though. A lightweight compression scheme can save energy when the energy required for transmission is greater than the energy cost of compression. In addition, the overall delay can even be reduced if the compression overhead is less than the difference between the transmission times of the uncompressed and compressed frames.

For non-interactive video systems, such as video playback, latency is not important as long as the decoder provides the required frame rate. Interactive video applications, by contrast, require low latency to be useful. When network latency is low enough, the video processing pipeline can become the bottleneck. Latency matters even more in fast-moving and safety-critical applications. Besides, a sufficiently low delay opens up space for new applications such as cloud gaming, extended reality (XR), or the internet of skills [5].

Use cases

The most common example of uncompressed video transport is through standard video links such as SDI and HDMI, or through Ethernet.
In particular, the massively deployed 3G-SDI was introduced with the SMPTE ST 424 standard in 2006 and has a throughput of 2.65 Gbps, which is enough for a video stream in 1080p60 format. Video compression with a ratio of about 4:1 would allow sending 4K/60p/422/10-bit video (requiring 10.8 Gbps) through 3G-SDI. 10G Ethernet (SMPTE 2022-6) has a throughput of 7.96 Gbps, and video compression with a ratio of 5:1 would allow sending two 4K/60p/444/12-bit video streams (requiring 37.9 Gbps in total) through it [3] [4] [6].

Embedded devices such as cameras use internal storage with limited access rates (4 Gbps for SSD drives, 400–720 Mbps for SD cards). Lightweight compression would allow real-time storage of video streams with higher throughput.

Omnidirectional video capture systems with multiple cameras covering different fields of view transfer video streams to a front-end processing system. Applying lightweight compression to these streams reduces both the required storage size and the throughput demands [3] [4] [6].

Head-mounted displays (HMDs) are used for viewing omnidirectional VR and AR content. Given the computational (and power) constraints of such a display, it cannot be expected to receive the omnidirectional stream and process it locally. Instead, the external source should send to the HMD only the portion of the media stream that lies within the viewer’s field of view. An immersive experience also requires very high-resolution video, and the quality of experience is crucially tied to latency [3] [4] [6].

Other target use cases include broadcasting and live production, frame buffer compression (inside video processing devices), industrial vision, ultra-high-frame-rate cameras, medical imaging, automotive infotainment, video surveillance and security, low-cost visual sensors in the Internet of Things, etc. [6]

Emergence of the new standard

Addressing this challenge, several initiatives have been started.
Among them is JPEG XS, launched by the JPEG committee in July 2015 with a Call for Proposals issued in March–June 2016 [6]. The evaluation process was structured into three activities: objective evaluations, subjective evaluations, and compliance analysis in terms of latency and complexity requirements. Based on the use cases described above, the following requirements were identified.

It is easy to see that none of the existing standards complies with the above requirements. JPEG and JPEG XT make precise rate control difficult and have a latency of one frame. With regard to latency, the versatility of JPEG 2000 allows configurations with an end-to-end latency of around 256 lines, or even as few as 41 lines in hardware implementations [4], but it still requires many hardware resources. VC-2 has low complexity but delivers only limited image quality. ProRes makes a low-latency implementation impossible and fast CPU implementations challenging.

Out of six proposed technologies, one was disqualified due to latency and complexity compliance issues, and two were selected for the next step of the standardization process. It was decided that the JPEG XS coding system would be based on a merge of those two proposals. The new compression algorithm provides precise rate control with a latency below 32 lines and fits in a low-cost FPGA. At the same time, its fine-grained parallelism allows efficient implementation on different platforms, while the compression quality is superior to that of VC-2.

JPEG XS algorithm overview

The JPEG XS coding system is a classical wavelet-based still image codec (see a more detailed description in [4] or in the standard [1] [2]). It uses a reversible color transformation and a reversible discrete wavelet transformation (Le Gall 5/3), both known from JPEG 2000. Here, however, the DWT is asymmetrical: the specification allows up to two vertical decomposition levels and up to eight horizontal levels. This restriction on the number of vertical levels ensures that the end-to-end latency does not exceed the maximum allowed value of 32 screen lines. In fact, the algorithmic encoder-decoder latency due to the DWT alone is 3 or 9 lines for one or two vertical decomposition levels, so there is a latency reserve for any form of rate allocation not specified in the standard.
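To make the reversible Le Gall 5/3 transform more concrete, here is a minimal one-dimensional sketch of one decomposition level in integer lifting form, as known from JPEG 2000. The function names `dwt53_forward` and `dwt53_inverse` are hypothetical, and the symmetric boundary extension used here is an assumption; the normative boundary handling and the separable 2D application with different horizontal and vertical depths are defined in the standard and are not reproduced.

```python
def dwt53_forward(x):
    """One level of the reversible 5/3 lifting transform on a list of ints.

    Returns (low, high): low-pass and high-pass coefficient lists.
    Boundary samples are mirrored (assumed symmetric extension).
    """
    if len(x) < 2:
        raise ValueError("need at least two samples")
    even, odd = x[0::2], x[1::2]
    ne, no = len(even), len(odd)

    # Predict step: each odd sample minus the floor-average of its even neighbours.
    high = []
    for i in range(no):
        right = even[i + 1] if i + 1 < ne else even[i]
        high.append(odd[i] - ((even[i] + right) >> 1))

    # Update step: each even sample plus a rounded quarter of neighbouring details.
    low = []
    for i in range(ne):
        d_left = high[i - 1] if i > 0 else high[0]
        d_right = high[i] if i < no else high[no - 1]
        low.append(even[i] + ((d_left + d_right + 2) >> 2))
    return low, high


def dwt53_inverse(low, high):
    """Exact inverse of dwt53_forward: undo the update step, then the predict step."""
    ne, no = len(low), len(high)
    even = []
    for i in range(ne):
        d_left = high[i - 1] if i > 0 else high[0]
        d_right = high[i] if i < no else high[no - 1]
        even.append(low[i] - ((d_left + d_right + 2) >> 2))

    x = []
    for i in range(ne):
        x.append(even[i])
        if i < no:
            right = even[i + 1] if i + 1 < ne else even[i]
            x.append(high[i] + ((even[i] + right) >> 1))
    return x


if __name__ == "__main__":
    row = [10, 12, 14, 13, 9, 7, 8, 200]
    low, high = dwt53_forward(row)
    print("low :", low)
    print("high:", high)
    print("round trip ok:", dwt53_inverse(low, high) == row)
```

Because every lifting step only adds or subtracts an integer computed from the other half of the samples, the inverse recovers the input exactly, which is what makes the transform reversible.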
The wavelet stage is followed by a pre-quantizer, which chops off eight out of 20 least significant bit planes. It is not used for rate control but ensures that the subsequent data path is 16 bits wide. After that, the actual quantization is performed. Unlike JPEG 2000, which uses a dead-zone quantizer, JPEG XS can optionally use a data-dependent uniform quantizer. The quantizer is controlled by the rate allocator, which guarantees compression to an externally given target bit rate; in many use cases this is a strict requirement.

In order to respect the target bit rate together with the maximum latency of 32 lines, JPEG XS divides the image into rectangular precincts. While precincts in JPEG 2000 are typically square regions, a precinct in JPEG XS spans one or two lines of wavelet coefficients for each band. Due to latency constraints, the rate allocator is not an exact optimization but rather a heuristic algorithm without actual distortion measurement. Moreover, the specific way the rate allocator operates is not defined in the standard, so different algorithms can be considered. The algorithm is ideal for a low-cost FPGA, where access to external memory should be avoided, but it can be suboptimal for a high-end GPU.

The next stage after rate allocation is entropy coding, which is relatively simple. The quantized wavelet coefficients are combined into coding groups of four coefficients. For each group, three datasets are formed: the bit-plane counts, the quantized values themselves, and the signs of all nonzero coefficients. Of these datasets, only the bit-plane counts are entropy coded, because they account for a major part of the overall rate. The rate allocator is free to select between four regular prediction modes per wavelet band: prediction on/off and significance coding on/off. Besides, it can select between two significance coding methods, which specify whether zero predictions or zero counts are coded.
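The three datasets formed per coding group can be illustrated with a short sketch. It assumes that the bit-plane count of a group is the number of bits needed to represent its largest magnitude, and that an incomplete final group is padded with zeros; both are simplifications for illustration, and the helper names `split_coding_groups` and `raw_payload_bits` are hypothetical rather than taken from the standard.

```python
def split_coding_groups(coeffs, group_size=4):
    """Split quantized coefficients into coding groups and form the three datasets.

    Each group is described by:
      bitplane_count: bits needed for the largest magnitude in the group (assumed definition)
      magnitudes:     absolute values of the coefficients
      signs:          one flag per nonzero coefficient (1 = negative, 0 = positive)
    """
    groups = []
    for start in range(0, len(coeffs), group_size):
        group = list(coeffs[start:start + group_size])
        group += [0] * (group_size - len(group))  # zero padding is an assumption
        magnitudes = [abs(c) for c in group]
        groups.append({
            "bitplane_count": max(magnitudes).bit_length(),
            "magnitudes": magnitudes,
            "signs": [1 if c < 0 else 0 for c in group if c != 0],
        })
    return groups


def raw_payload_bits(groups, group_size=4):
    """Bits for magnitudes and signs only; the bit-plane counts themselves
    are the part that would be entropy coded and are not counted here."""
    return sum(group_size * g["bitplane_count"] + len(g["signs"]) for g in groups)


if __name__ == "__main__":
    quantized = [0, 0, 0, 0, 3, -1, 0, 2, -17, 4, 0, 0]
    groups = split_coding_groups(quantized)
    for g in groups:
        print(g)
    print("magnitude + sign bits:", raw_payload_bits(groups))
```

In this sketch an all-zero group has a bit-plane count of zero and contributes no magnitude or sign bits, which hints at why signalling such groups efficiently (significance coding) is worthwhile.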
“Raw fallback mode” allows disabling bit-plane coding and should be used when the regular coding modes are redundant. A smoothing buffer ensures a constant bit rate at the encoder output even if some regions of the input image are easier to compress than others. This buffer can have different sizes depending on the selected profile. This choice affects the rate control algorithm, which uses the buffer to smooth out rate variations.

JPEG XS profiles

Particular applications may have additional constraints on the codec, such as even lower complexity or a buffer size limitation. The standard therefore defines several profiles to allow different levels of latency and complexity. In fact, the entire Part 2 of the standard (ISO/IEC 21122-2 “Profiles and Buffer Models” [7]) is devoted to the specification of profiles, levels and sublevels [4].

Each profile makes it possible to estimate the necessary number of logic elements, the memory footprint, and whether chroma subsampling or an alpha channel is required. Profiles are structured along the maximum bit depth, the quantizer type, the smoothing buffer size, and the number of vertical DWT levels. Other coding tools, such as the choice of embedded/separate sign coding or the insignificant coding groups method, increase decoder complexity only insignificantly, so they are not restricted by the profile.

As such, the standard defines eight profiles, whose characteristics are summarized in Table 1. The three “Main” profiles target all types of content (natural, CGI, screen) for Broadcast, Pro-AV, Frame Buffer and Display link use cases. The two “High” profiles allow a second vertical decomposition level and target all types of content for high-end devices and cinema remote production. The two “Light” profiles are considered suitable for natural content only and target Broadcast, industrial camera and in-camera compression use cases.
Finally, the “Light-subline” profile, with minimal latency (due to zero vertical decomposition levels and the shortest smoothing buffer), is also suitable for natural content only and targets cost-sensitive applications.

Profiles determine the set of coding features, while levels and sublevels limit the buffer sizes. In particular, levels restrict them in the uncompressed image domain and sublevels in the compressed domain. Similar to HEVC levels, JPEG XS levels constrain the frame dimensions and the refresh rate (e.g., 1920p/60).

Table 1. Configuration of JPEG XS Profiles [4].

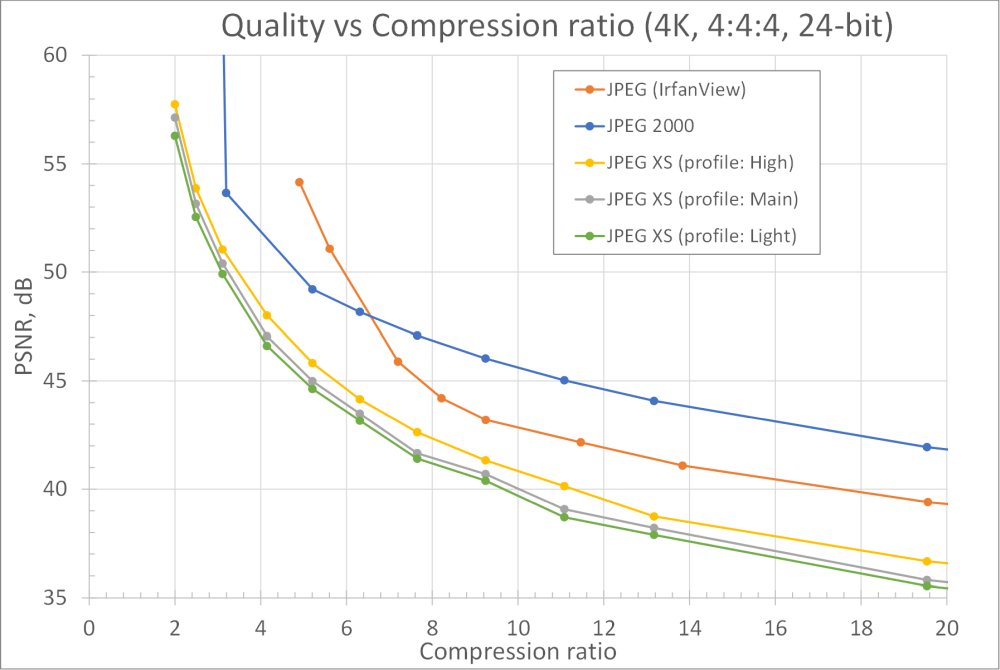

Performance evaluation

This section shows experimental results of a rate-distortion comparison against other compression technologies, with PSNR as the distortion measure. We focus on RGB 4:4:4 24-bit natural content here, as results for subsampled images and images with higher bit depth have been shown to be similar.

Figure 1. Dependence of image quality, measured by PSNR, on compression ratio for different codecs and profiles.
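For reference, the distortion measure can be written down in a few lines. The sketch below uses the usual PSNR definition for 8-bit samples, 10·log10(255² / MSE), and converts a compression ratio into a bit budget per pixel for 24-bit RGB content. The function names `psnr` and `target_bpp` are hypothetical; the measurement tooling actually used for the figure is not described in this article.

```python
import math


def psnr(original, decoded, max_value=255):
    """PSNR in dB between two equally long sequences of sample values."""
    if len(original) != len(decoded) or not original:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(original, decoded)) / len(original)
    if mse == 0:
        return math.inf  # identical images: lossless reconstruction
    return 10.0 * math.log10(max_value ** 2 / mse)


def target_bpp(compression_ratio, source_bpp=24):
    """Bits per pixel available at a given compression ratio."""
    return source_bpp / compression_ratio


if __name__ == "__main__":
    reference = [10, 20, 30, 40]
    degraded = [11, 19, 31, 39]  # every sample off by one, so MSE = 1
    print(f"PSNR: {psnr(reference, degraded):.2f} dB")
    print(f"bpp at 10:1: {target_bpp(10):.2f}")
```

With this definition, a PSNR of 40 dB for 8-bit samples corresponds to a mean squared error of about 6.5, and a lossless reconstruction yields an infinite PSNR.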

In Figure 1 we compare the rate-distortion dependencies of JPEG XS and two classical image codecs, JPEG and JPEG 2000. The testing procedure was as follows. Our test image 4k_wild.ppm (3840 × 2160 × 24 bpp), with natural content, was compressed multiple times with several compression ratios in the range from 2:1 to 20:1. The ratios are identical for JPEG XS and JPEG 2000, which allows a direct comparison. The ratios differ for JPEG, because it has no precise rate control. The highest point of the JPEG 2000 curve (with infinite PSNR) shows the compression ratio of the reversible algorithm. The test image is visually lossless in all cases where PSNR is higher than 40 dB.

As the figure shows, among the three image codecs JPEG 2000 delivers the highest quality (visually lossless even at a ratio of 30:1 for this image), but at the cost of much greater computational complexity. The quality of classical JPEG images is even higher at ratios of 6:1 or less (and visually lossless at a ratio of 14:1), and JPEG has low complexity, but the lack of precise rate control can be critical in some applications, and the minimum latency is one frame. That is why it cannot substitute for uncompressed video or JPEG XS.

Although the JPEG XS curves lie below the curves of the other two codecs, the image quality is still high enough to be visually lossless when the ratio is below 10:1. The average PSNR difference is 5.4 dB between JPEG 2000 and the “high” profile JPEG XS, and 4.5 dB between JPEG 2000 and the “main” profile JPEG XS (for compression ratios up to 10:1). The average difference is 0.75 dB between the “main” and “high” profiles and only 0.45 dB between the “main” and “light” profiles.

Patents and RAND

Please bear in mind that JPEG XS contains patented technology, which is made available for licensing via the JPEG XS Patent Portfolio License (JPEG XS PPL).
This license pool covers essential patents owned by the Licensors for implementing the ISO/IEC 21122 JPEG XS video coding standard and is available under RAND terms. You can find more information at https://www.jpegxspool.com

We have implemented a high-performance JPEG XS decoder on GPU as an accelerated counterpart to the JPEG XS reference software from iso.org (Part 5 of the international standard, ISO/IEC 21122-5:2020 [8]), which is written for CPU and performs far below real-time processing. We did this to show the potential for GPU-based speedup of such software. We can offer our customers a high-performance JPEG XS decoder for NVIDIA GPUs, but all questions concerning the licensing of JPEG XS technology must be settled by the customer with the JPEG XS patent owners.

References
