
Multi Camera Systems with GPU Image Processing

A multi-camera system can do jobs that are impossible for a single camera. It is a way to get a bigger field of view, higher resolution, higher data rate, different spectral sensitivity, etc. Nowadays multi-camera systems are used in many industries for various applications. Such a system can provide much more information than a single camera. Nevertheless, multi-camera systems are quite complicated to handle, and many factors must be taken into account to make them work properly and at full speed.

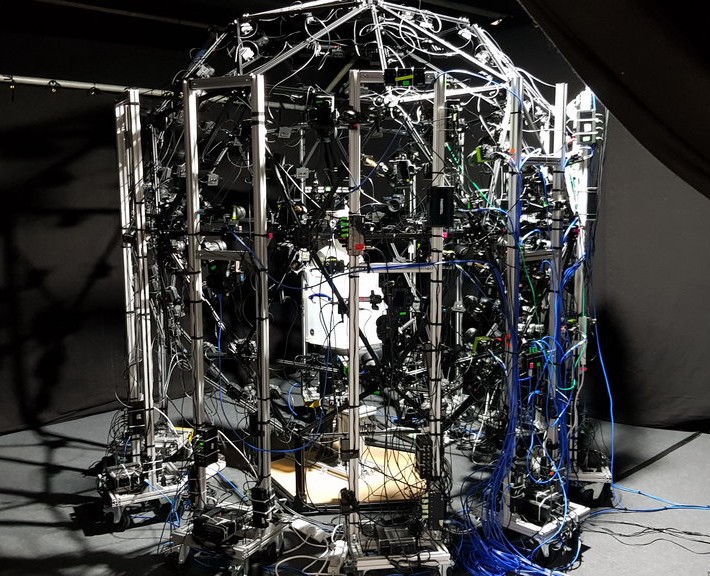

Fig.1. Full view of a multi-camera rig from Infinite Realities

Applications for multi-camera systems

- VR and Digital Cinema: 3D models are usually created with multi-camera systems. 3D computer-animated movies are a current trend in digital cinema, and the art of digital visual effects has matured to the point where it's possible to recreate almost anything. The precision of 3D models and character animation depends on the number of cameras, their resolution, bit depth, etc. 3D modelling software relies on image series from modern multi-camera systems.

- Medical imaging: various imaging systems such as endoscopes, microscopes, X-ray devices and operating room monitoring cameras are used in medical applications. A major challenge is to control all these video sources in the clinical environment: supporting diverse cabling concepts, operating the cameras, and transporting, transforming, displaying, documenting and archiving images and video.

- Mapping: multi-camera tracking and mapping with non-overlapping fields of view is used in real-time pose estimation systems. A multi-camera cluster can be built by mounting multiple perspective cameras on a multirotor aerial vehicle and adding tracking markers, so that high-precision ground-truth motion measurements can be collected from an optical indoor positioning system.

- Video surveillance: tasks include multi-camera calibration, computing the topology of camera networks, multi-camera tracking, object re-identification, multi-camera activity analysis and cooperative video surveillance with both active and static cameras. As surveillance multi-camera systems develop, camera networks grow in scale and complexity, and the monitored environments become much more complicated.

- Motion tracking: multi-target tracking (MTT) and multi-object tracking (MOT) are active and challenging research topics aimed at more robust tracking. Typical multi-camera tracking approaches assume overlapping cameras observing the same 3D scene and exploit real-world constraints such as a common geometry. Most multi-camera approaches to multiple object tracking rely on background subtraction and project all foreground pixels onto a common ground plane.

- Sport: high-end multi-camera broadcasting (live multi-cam streaming) of sport competitions is a global trend. Apart from that, real-time sports video analytics, replays and in-depth event coverage can be much more effective with multi-camera systems.

- 3D scanning and photogrammetry: modern state-of-the-art photogrammetric 3D/4D scanner systems are based on multi-camera technology. Such multi-camera rigs rapidly capture the 2D images used to create 3D models of still or moving objects, animals and humans.

- Robotics, UAVs and self-driving vehicles need precise information about their own position and the positions of surrounding objects, which can be measured with multi-camera systems. A multi-camera system can cover a full 360-degree field of view around a car to avoid blind spots that could otherwise lead to accidents. Standard image processing pipelines for 3D mapping, visual localization and obstacle detection need to be adapted to take full advantage of multiple cameras instead of treating each camera individually.

- Machine vision: industrial cameras are used for sophisticated control in production. Many such control and automation tasks can't be solved with one camera, so multi-camera systems are necessary.

- High-speed imaging also benefits from multi-camera systems. Simultaneous recordings from different angles provide much more information for scientific research, testing and diagnostics.
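As noted under motion tracking, many methods project foreground pixels from every camera onto a common ground plane. For a flat ground, each camera's mapping is usually expressed as a 3x3 homography. The sketch below is a minimal plain-Python illustration. The function names and matrix values are hypothetical placeholders, not calibration data from any real rig.

```python
# Map foreground pixels of one camera onto a shared ground plane with a 3x3
# homography H. H is a hypothetical example; in practice it comes from
# per-camera calibration.

def apply_homography(H, x, y):
    """Return ground-plane coordinates (X, Y) for image pixel (x, y)."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("pixel maps to a point at infinity")
    return u / w, v / w


def project_foreground(H, mask):
    """Project every foreground pixel (non-zero mask value) onto the ground plane."""
    points = []
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                points.append(apply_homography(H, x, y))
    return points


if __name__ == "__main__":
    # Hypothetical homography: scale by 0.5, then shift by (10, 20).
    H = [[0.5, 0.0, 10.0],
         [0.0, 0.5, 20.0],
         [0.0, 0.0, 1.0]]
    mask = [[0, 1],
            [1, 0]]
    print(project_foreground(H, mask))  # [(10.5, 20.0), (10.0, 20.5)]
```

Foreground points from all cameras then share one ground coordinate system. There they can be accumulated, for example into an occupancy grid, to locate people or objects.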

Architecture of multi-camera systems

To build a multi-camera system, we need to take into account many aspects of the future imaging solution. Below we briefly describe the main points that really matter for creating a viable multi-camera system.

Cameras and accessories for multi-camera system

- Image sensors: mono/color, NIR, spectral sensitivity, dimensions, pixel size, bit depth, frame rate, resolution, ROI, etc.

- Additional sensors: lidar, GPS

- Illumination: laser, strobe, synchro, structured/modulated, wavelength-specific

- Trigger: master, master-slave, jitter

- Optics: mount, quality, focal lengths, filters, remote control, etc.

- Other constraints: size, weight, heat, temperature, power consumption
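Trigger mode and jitter determine how closely frames from different cameras line up in time. A common sanity check is to group frames by timestamp and accept a group only if its spread stays within a tolerance. The minimal sketch below assumes that each camera delivers a sorted list of frame timestamps in microseconds. The function name and the tolerance value are hypothetical.

```python
# Group frames from several cameras into synchronized sets by timestamp.
# Assumption: each camera delivers a sorted list of timestamps in microseconds.

def group_synchronized(streams, tolerance_us):
    """Return lists with one timestamp per camera whose spread <= tolerance_us.

    streams: list of sorted timestamp lists, one per camera.
    Frames that cannot be matched within the tolerance are skipped.
    """
    if not streams:
        return []
    idx = [0] * len(streams)
    groups = []
    while all(i < len(s) for i, s in zip(idx, streams)):
        current = [s[i] for i, s in zip(idx, streams)]
        earliest, latest = min(current), max(current)
        if latest - earliest <= tolerance_us:
            groups.append(current)
            idx = [i + 1 for i in idx]
        else:
            # The earliest frame has no partner close enough in time: drop it.
            idx[current.index(earliest)] += 1
    return groups


if __name__ == "__main__":
    cam_a = [0, 1000, 2000]
    cam_b = [5, 1003, 1500, 2004]  # 1500 is a spurious frame
    print(group_synchronized([cam_a, cam_b], tolerance_us=10))
    # [[0, 5], [1000, 1003], [2000, 2004]]
```

Dropping the earliest frame is safe here. Every later frame from the other cameras is even further away in time, so that frame can never become part of a valid group.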

Hardware-related issues

- GPU model, architecture, memory size

- PCIe switch: upstream PCIe x8 Gen3, downstream PCIe or USB, trigger & power

- Frame grabber if necessary

- Availability of interfaces: PCIe, USB3, GigE / 10GigE, CameraLink, Coax, etc.

- Cable connections: Flat ribbon, iPass, FireFly, Coax

- Cable length, ability for cable replication

- Power: external power supply, battery
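A quick way to size the PCIe switch is to compare the aggregate raw camera data rate with the upstream link. PCIe Gen3 signals at 8 GT/s per lane with 128b/130b encoding, which gives about 985 MB/s per lane before protocol overhead. The efficiency factor of 0.8 used below is an assumption, not a measured value. The camera parameters are hypothetical examples.

```python
# Rough check: does the aggregate camera data rate fit into a PCIe Gen3 link?
# PCIe Gen3: 8 GT/s per lane, 128b/130b line coding.
# The efficiency factor for packet overhead is an assumed value.

GEN3_TRANSFERS_PER_S = 8e9   # per lane
GEN3_ENCODING = 128 / 130    # 128b/130b line coding


def pcie_gen3_bytes_per_s(lanes, efficiency=0.8):
    """Usable bytes/s of a PCIe Gen3 link (efficiency is an assumed factor)."""
    return lanes * GEN3_TRANSFERS_PER_S * GEN3_ENCODING / 8 * efficiency


def camera_bytes_per_s(width, height, bits_per_pixel, fps):
    """Raw data rate of one camera in bytes/s."""
    return width * height * bits_per_pixel / 8 * fps


def max_cameras(link_bytes_per_s, per_camera_bytes_per_s):
    """How many identical cameras fit into the link."""
    return int(link_bytes_per_s // per_camera_bytes_per_s)


if __name__ == "__main__":
    link = pcie_gen3_bytes_per_s(lanes=8)
    cam = camera_bytes_per_s(4096, 3000, bits_per_pixel=8, fps=30)
    print(max_cameras(link, cam))
```

With these assumptions, an x8 Gen3 upstream link carries about 6.3 GB/s. A hypothetical 4096x3000, 8-bit, 30 fps camera needs about 369 MB/s, so roughly 17 such cameras would fit. Packing 12-bit data or raising the frame rate lowers that number quickly.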

Software for multi-camera systems

- OS and platform: Windows, Linux, ARM

- Multi-camera control software

- Realtime data acquisition and recording

- Image sensor control

- Image processing pipeline for each camera

- Realtime or offline image processing on CPU or GPU

- Output compression

- Storage or streaming

- Post processing
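Real-time acquisition and recording must not silently lose frames. Many cameras attach a frame counter to each image, and gaps in that counter reveal drops. The minimal sketch below assumes a per-camera counter that increments by exactly 1 per frame. The class and method names are hypothetical, and counter wrap-around is not handled.

```python
# Detect dropped frames per camera from frame counters.
# Assumption: each camera stamps its frames with a counter that increments by 1.
# Counter wrap-around is not handled in this sketch.

from collections import defaultdict


class DropMonitor:
    def __init__(self):
        self.last_counter = {}           # camera_id -> last counter seen
        self.dropped = defaultdict(int)  # camera_id -> total frames lost

    def on_frame(self, camera_id, counter):
        """Register a received frame; return how many frames were lost before it."""
        lost = 0
        prev = self.last_counter.get(camera_id)
        if prev is not None and counter > prev + 1:
            lost = counter - prev - 1
            self.dropped[camera_id] += lost
        self.last_counter[camera_id] = counter
        return lost


if __name__ == "__main__":
    monitor = DropMonitor()
    for cam, cnt in [(0, 1), (1, 1), (0, 2), (0, 5), (1, 2)]:
        monitor.on_frame(cam, cnt)
    print(dict(monitor.dropped))  # {0: 2}
```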

Multi-camera system configurations

- Inside-out (180/360 panorama systems)

- Outside-in (3D dome)

- Camera array

- Cluster of cameras (set of cameras in one box/body)
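For a 180/360 panorama system, a first design question is how many cameras are needed. For a rectilinear lens, the horizontal field of view is 2·atan(w / 2f), where w is the sensor width and f is the focal length. In a closed 360-degree ring, each camera effectively contributes its field of view minus the stitching overlap. The minimal sketch below assumes evenly spaced cameras in one horizontal ring. The sensor, lens and overlap values are hypothetical examples.

```python
# Estimate how many cameras a full 360-degree panorama ring needs.
# Assumptions: rectilinear lenses, cameras evenly spaced in one horizontal ring,
# a fixed angular overlap between neighbours for stitching.

import math


def horizontal_fov_deg(sensor_width_mm, focal_length_mm):
    """Horizontal field of view of a rectilinear lens, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


def cameras_for_ring(hfov_deg, overlap_deg):
    """Cameras needed so that a closed 360-degree ring keeps the given overlap."""
    step = hfov_deg - overlap_deg
    if step <= 0:
        raise ValueError("overlap must be smaller than the field of view")
    return math.ceil(360 / step)


if __name__ == "__main__":
    fov = horizontal_fov_deg(12.8, 8.0)  # about 77.3 degrees
    print(cameras_for_ring(fov, 15.0))   # 6
```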

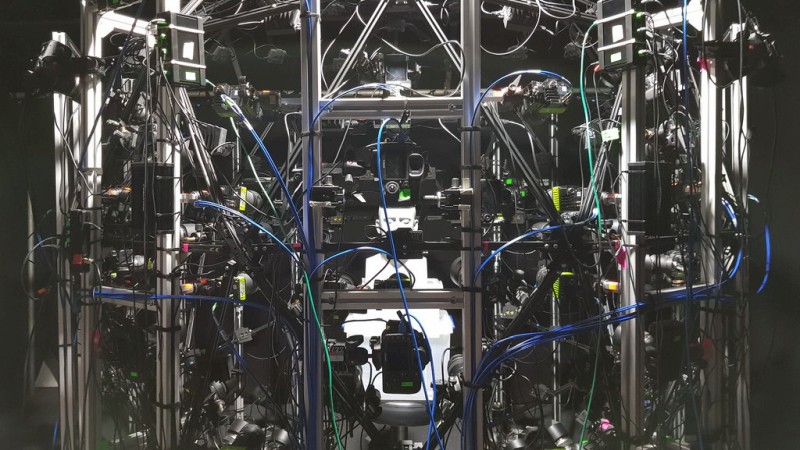

Fig.2. 3D scanning multi-camera system from Infinite Realities

Input calibration parameters for each camera

- Dark frame

- Flat-field frame for flat-field correction (FFC)

- Digital Camera Profile (DCP)

- Lens Correction Profile (LCP)

- Camera intrinsic parameters: principal point, aspect ratio, focal length, distortion center and coefficients

- Camera extrinsic parameters: translation, rotation, homography
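The listed parameters work together in the standard pinhole camera model. The extrinsics (R, t) move a world point into camera coordinates. The point is then divided by depth, radially distorted, and mapped to pixels with the focal lengths and principal point. The sketch below is a minimal illustration under stated assumptions: two radial coefficients k1 and k2, the distortion center taken equal to the principal point, and no tangential terms. All parameter values are hypothetical.

```python
# Project a 3D world point into pixel coordinates of one camera.
# Assumptions: pinhole model, two-term radial distortion (k1, k2) centred on the
# principal point, no tangential distortion. Parameter values are hypothetical.

def project_point(Xw, R, t, fx, fy, cx, cy, k1=0.0, k2=0.0):
    # Extrinsics: world -> camera coordinates, Xc = R * Xw + t
    Xc = [sum(R[i][j] * Xw[j] for j in range(3)) + t[i] for i in range(3)]
    if Xc[2] <= 0:
        raise ValueError("point is behind the camera")
    # Normalised image coordinates
    x, y = Xc[0] / Xc[2], Xc[1] / Xc[2]
    # Radial distortion
    r2 = x * x + y * y
    d = 1.0 + k1 * r2 + k2 * r2 * r2
    xd, yd = x * d, y * d
    # Intrinsics: focal lengths (fy / fx is the aspect ratio) and principal point
    return fx * xd + cx, fy * yd + cy


if __name__ == "__main__":
    R = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]  # camera aligned with world axes
    t = [0.0, 0.0, 0.0]
    print(project_point([0.1, -0.2, 2.0], R, t, 1000, 1000, 640, 480, k1=0.1))
```

In a multi-camera rig, each camera has its own set of these values. Undistortion and projection on the GPU simply apply the inverse mapping per camera.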

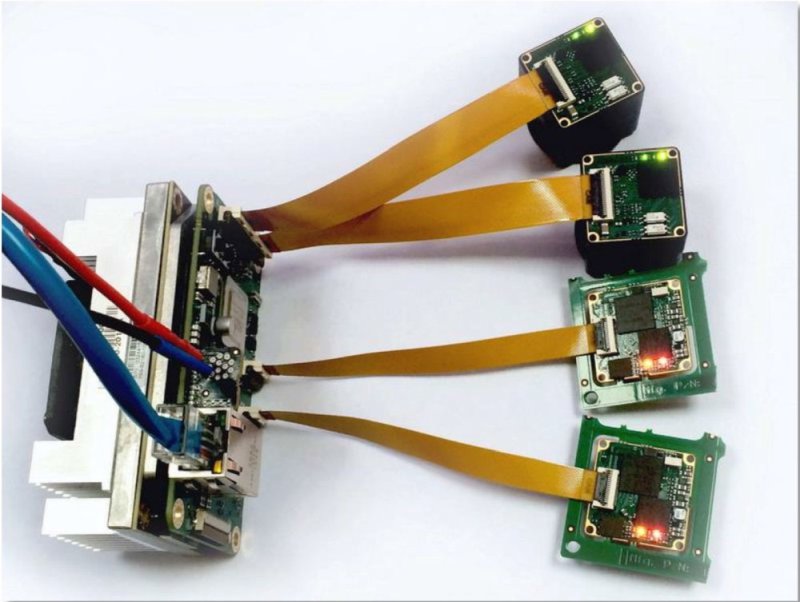

Fig.3. Embedded multi-camera system on NVIDIA Jetson from XIMEA

The image processing pipeline for a multi-camera system is usually essentially the same as for a single camera. The differences come from synchronization, relative camera positions, individual camera parameters and the high data rate. The most difficult problem is the high data rate. When the number of frames per second grows many times over, we can't scale CPU performance accordingly on the same hardware, because even for a single camera the CPU image processing software is already quite complicated and multithreaded. In many cases further scaling is not possible, so we need another approach.

GPU acceleration of image processing in camera applications can be a good solution to this problem. We have excellent benchmarks for GPU-based image processing, and this is the way to scale performance for a multi-camera system. On a GPU we can process raw streams from multiple cameras in real time. We just need to supply individual calibration data with each frame so that the GPU applies the corresponding parameters for each camera.
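Supplying individual calibration data per frame is mostly a bookkeeping problem. Frames from all cameras arrive interleaved, and each one must be paired with its camera's parameters before it is processed. The minimal sketch below uses a dictionary keyed by camera id and groups frames into batches for processing. The data classes, field names and batch size are hypothetical and do not represent the Fastvideo SDK API.

```python
# Pair every frame from a multi-camera stream with its camera's calibration data.
# Names and layout are hypothetical; a GPU pipeline would typically upload each
# camera's calibration once and reference it by camera id per frame.

from dataclasses import dataclass


@dataclass(frozen=True)
class Calibration:
    black_level: int   # e.g. derived from the dark frame
    wb_gains: tuple    # (r, g, b) white balance gains


@dataclass
class Frame:
    camera_id: int
    pixels: list       # raw pixel values


def make_batches(frames, calibrations, batch_size):
    """Yield batches of (frame, calibration) pairs in arrival order."""
    batch = []
    for frame in frames:
        calib = calibrations.get(frame.camera_id)
        if calib is None:
            raise KeyError(f"no calibration for camera {frame.camera_id}")
        batch.append((frame, calib))
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


if __name__ == "__main__":
    calibs = {0: Calibration(64, (2.0, 1.0, 1.5)), 1: Calibration(60, (1.8, 1.0, 1.6))}
    frames = [Frame(0, [100]), Frame(1, [120]), Frame(0, [110])]
    for b in make_batches(frames, calibs, batch_size=2):
        print([(f.camera_id, c.black_level) for f, c in b])
```

Batching frames from several cameras keeps the GPU busy with larger workloads. The per-camera lookup ensures that each frame still receives its own parameters.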

We've implemented quite a lot of such solutions for different camera applications, and our experience helps our customers with their projects. We have a GPU-based Image & Video Processing SDK that can solve such multi-camera tasks.

Fig.4. Fastvideo SDK to process raw images on GPU for multi-camera systems

Pipeline example for a multi-camera system on GPU

- RAW image acquisition and data import into GPU memory

- Dark frame subtraction

- Flat-Field Correction

- White Balance

- RAW 1D LUT (composite or per-channel)

- Debayer

- Denoiser (wavelet, bilateral, NLM)

- Color correction with matrix profile

- Exposure correction

- Curves and levels for RGB/HSV

- Projection together with undistortion

- Crop and Resize

- Sharpening

- 3D LUT (RGB/HSV)

- Gamma with output color space conversion

- Image Compression to JPEG, JPEG2000, TIFF, EXR

- Video Compression to MJPEG, MJPEG2000, H.264, H.265, AV1

- Data storage or streaming via RTSP
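The first raw-domain stages of this pipeline are simple per-pixel operations, which is why they map well onto the GPU. The CPU reference sketch below shows dark frame subtraction, flat-field correction with gain mean(F − D) / (F − D), white balance on a Bayer mosaic, and a 1D LUT. It assumes an RGGB pattern, 12-bit data and images stored as lists of rows. It is an illustration, not the Fastvideo SDK implementation.

```python
# CPU reference for the first raw-domain stages of the pipeline above:
# dark frame subtraction, flat-field correction, white balance, 1D LUT.
# Assumptions: RGGB Bayer pattern, 12-bit data, images as lists of rows.

MAX_VALUE = 4095  # 12-bit; the LUT must have MAX_VALUE + 1 entries


def clamp(v, lo=0, hi=MAX_VALUE):
    return max(lo, min(hi, v))


def ffc_gain_map(dark, flat):
    """Per-pixel flat-field gain mean(F - D) / (F - D)."""
    diff = [[f - d for f, d in zip(fr, dr)] for fr, dr in zip(flat, dark)]
    n = sum(len(r) for r in diff)
    mean = sum(sum(r) for r in diff) / n
    return [[mean / v if v > 0 else 1.0 for v in row] for row in diff]


def wb_gain(x, y, gains):
    """RGGB mosaic: R at (even x, even y), B at (odd x, odd y), G elsewhere."""
    r, g, b = gains
    if y % 2 == 0 and x % 2 == 0:
        return r
    if y % 2 == 1 and x % 2 == 1:
        return b
    return g


def process_raw(raw, dark, gain_map, wb_gains, lut):
    out = []
    for y, row in enumerate(raw):
        out_row = []
        for x, v in enumerate(row):
            v = v - dark[y][x]                # dark frame subtraction
            v = v * gain_map[y][x]            # flat-field correction
            v = v * wb_gain(x, y, wb_gains)   # white balance
            out_row.append(lut[round(clamp(v))])  # clip, then 1D LUT
        out.append(out_row)
    return out


if __name__ == "__main__":
    dark = [[10, 10], [10, 10]]
    flat = [[110, 110], [110, 110]]
    raw = [[60, 60], [60, 60]]
    lut = list(range(MAX_VALUE + 1))  # identity LUT
    print(process_raw(raw, dark, ffc_gain_map(dark, flat), (2.0, 1.0, 1.5), lut))
    # [[100, 50], [50, 75]]
```

On a GPU, the same math typically runs as one fused kernel per frame, with the dark frame, gain map and gains chosen per camera.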

Please send us a request describing the image processing pipeline you plan for your multi-camera system. To evaluate the quality and performance of our solution, please download the Fast CinemaDNG Processor software for Windows. At the same link you can also download DNG image series for testing.

We would also recommend testing our FastVCR camera application, which is ready to be tested with XIMEA USB3 and PCIe machine vision cameras. We've implemented the above image processing pipeline in it, and it works in real time. This is especially important for camera applications that must run at high data rates without frame drops. On a good GPU it's possible to achieve processing performance of 2-4 GPix/s or even more. For a simplified pipeline, one could get up to 10 GPix/s for RAW to RGB conversion.
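To check whether a given rig can run in real time, compare its aggregate pixel rate with the GPU throughput. The throughput is an input to this sketch: the 2-4 GPix/s range depends on the GPU model and on the pipeline, so it should be measured for the actual configuration. The camera list below is a hypothetical example.

```python
# Check whether a multi-camera rig fits into a given GPU processing throughput.
# The throughput value is an input; measure it for your GPU and pipeline.

def aggregate_gpix_per_s(cameras):
    """cameras: iterable of (width, height, fps) tuples."""
    return sum(w * h * fps for w, h, fps in cameras) / 1e9


def gpu_utilization(cameras, gpu_gpix_per_s):
    """Fraction of GPU throughput needed; above 1.0 the pipeline cannot keep up."""
    return aggregate_gpix_per_s(cameras) / gpu_gpix_per_s


if __name__ == "__main__":
    rig = [(4096, 3000, 30)] * 8  # eight hypothetical 12 MPix cameras at 30 fps
    print(aggregate_gpix_per_s(rig))            # about 2.95 GPix/s
    print(gpu_utilization(rig, gpu_gpix_per_s=4.0))
```

Keeping utilization noticeably below 1.0 leaves headroom for compression, storage and other workloads that share the same GPU.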

Fig.5. Full image processing pipeline on NVIDIA CUDA for each camera in multi-camera system

The above image processing pipeline for NVIDIA GPUs can solve the standard task of RAW to RGB conversion (compressed or uncompressed) for offline and real-time applications. We've used it in different multi-camera systems, and in all cases we've got very good results. The GPU is a powerful means for real-time image processing in multi-camera systems, and we suggest trying our SDK for these applications.
