Web Resize on-the-fly: one thousand images per second on Tesla V100 GPU

Fastvideo has been developing a GPU-based image processing SDK since 2011, and we have achieved outstanding software performance on NVIDIA GPUs (mobile, laptop, desktop, server). We implemented the first JPEG codec on CUDA, which is still the fastest solution on the market. Apart from JPEG, we have also released a JPEG2000 codec on GPU and an SDK with high performance image processing algorithms on CUDA. Our SDK offers exceptional speed for many imaging applications, especially where CPU-based solutions cannot deliver sufficient performance or low enough latency. Now we would like to introduce our solution for resizing images on the fly.

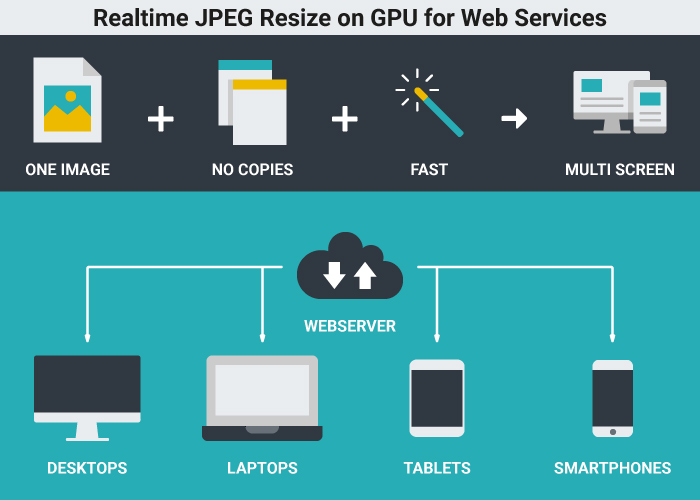

JPEG Resize on-the-fly

Many imaging applications need image resize, and quite often the images to resize are JPEGs. That complicates the task, because a compressed image can't be resized directly. The solution is not difficult: decompress the image, resize it, and encode the result to get the resized image. Difficulties appear when many millions of images must be resized every day, and that raises questions of performance optimization. Now it is not enough to get it right; it also has to be very fast. The good news is that it can be done.

The standard set of demo applications in the Fastvideo SDK for NVIDIA GPUs includes a sample application for JPEG resize. It is supplied both as binaries and with source code, so users can integrate it easily into their own software. This software solves the problem of fast resize (JPEG resize on-the-fly), which is essential for many high performance applications, including high load web services. The application resizes JPEGs very fast, and users can run the binary to check image quality and performance.

If we take a high load web application as an example, the task can be formulated as follows: we have a big database of images in JPEG format, and we need to resize these images fast and with minimum latency. Big sites with responsive design face the same problem: how to prepare a set of images with optimal resolutions to minimize traffic, and to do that as fast as possible?

First we need to answer the question “Why JPEG?”. Modern internet services get most of such images from their users, who create them with mobile phones or cameras. For that situation JPEG is a standard and reasonable choice. Other formats on mobile phones and cameras do exist, but they are not as widespread as JPEG. Many images are stored as WebP, but that format is still not as popular as JPEG.
Moreover, encoding and decoding of WebP images are much slower than for JPEG, and this is also very important.

Quite often, such high load web services keep multiple copies of the same image at different resolutions to get a low latency response. That approach leads to extra storage expenses, especially for high performance applications, web services and big image databases. The idea for a better solution is quite simple: store just one JPEG image in the database instead of an image series and transform it to the desired resolution on the fly, which means very fast and with minimum latency.

How to prepare image database

We will store all images in the database in JPEG format, but it is not a good idea to use them “as is”. It’s important to prepare all images in the database for fast decoding later. That is why we pre-process all images in the database off-line to insert so-called “JPEG restart markers” into each image. The JPEG standard allows such markers, and most JPEG decoders process images with these markers without problems. Most smartphones and cameras don’t produce JPEGs with restart markers, so we add them with our software. This is a lossless procedure: image content doesn’t change, though the file size becomes slightly larger.

To make the full solution efficient, we can use statistics about the most frequent user device resolutions. Users view pictures on phones, laptops and PCs, and quite often a picture takes up just part of the screen, so image resolutions should not be too big. This is the ground to conclude that most images in our database could have resolutions of no more than 1K or 2K. We will consider both choices to evaluate latency and performance. If a user device needs a bigger resolution, we can simply resize with an upscaling algorithm.
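To check whether an image in the database already carries the restart markers described above, one can inspect the JPEG byte stream directly. The sketch below is an illustration only and is not part of the Fastvideo SDK: the function name `restart_info` is hypothetical, and it assumes a single-scan baseline JPEG. It relies on two facts from the JPEG standard: the DRI segment (marker 0xFFDD) stores the restart interval in MCUs, and the markers RST0 to RST7 (0xFFD0 to 0xFFD7) appear inside the entropy-coded data.

```python
import struct
from typing import Tuple


def restart_info(data: bytes) -> Tuple[int, int]:
    """Return (restart_interval, rst_marker_count) for a JPEG byte stream.

    Hypothetical helper for illustration; assumes a single-scan baseline JPEG.
    A restart interval of 0 means restart markers are not enabled.
    """
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG stream (missing SOI marker)")

    interval = 0
    pos = 2
    # Walk the header segments until the start-of-scan (SOS) segment.
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError("expected a marker at offset %d" % pos)
        marker = data[pos + 1]
        if marker == 0xFF:  # fill byte before a marker
            pos += 1
            continue
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if marker == 0xDD:  # DRI: define restart interval
            (interval,) = struct.unpack(">H", data[pos + 4:pos + 6])
        pos += 2 + length
        if marker == 0xDA:  # SOS: entropy-coded data starts here
            break
    else:
        return interval, 0  # no scan found

    # Count RST0..RST7 markers inside the entropy-coded data.
    count = 0
    i = pos
    while i + 1 < len(data):
        if data[i] != 0xFF:
            i += 1
            continue
        nxt = data[i + 1]
        if nxt == 0xFF:  # fill byte
            i += 1
        elif nxt == 0x00:  # stuffed 0xFF data byte, not a marker
            i += 2
        elif 0xD0 <= nxt <= 0xD7:  # RSTn
            count += 1
            i += 2
        else:  # EOI or any other marker ends the first scan
            break
    return interval, count


# Synthetic, non-decodable stream: SOI, APP0, DRI(interval=8), SOS, data, EOI.
demo = (b"\xff\xd8" + b"\xff\xe0\x00\x04ab" + b"\xff\xdd\x00\x04\x00\x08"
        + b"\xff\xda\x00\x02" + b"\x12\xff\x00\x34\xff\xd0\x56\xff\xd1\x78"
        + b"\xff\xd9")
print(restart_info(demo))
```

A result with interval 0 and no RST markers suggests the image still needs the off-line pre-processing step. Each restart marker resets the decoder state, so the segments between markers can be decoded independently of each other.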
A bigger default image resolution can also be chosen for the database; the general solution stays the same.

For practical purposes we consider JPEG compression with parameters that correspond to “visually lossless compression”. That means JPEG compression quality around 90% with subsampling 4:2:0 or 4:4:4. To evaluate JPEG resize time, we test with downscaling to 50% for both width and height. In real life various scaling coefficients could be used, but 50% can be considered a standard case for testing.

Algorithm description for JPEG Resize on-the-fly software

This is the full image processing pipeline for fast JPEG resize that we've implemented in our software:

We could also implement the same solution with better precision. Before resize we could apply inverse gamma to all color components of each pixel, in order to perform the resize in linear space. Then we would apply forward gamma to all pixels right after the sharpening step. The visual difference is not big, though it is noticeable. The computational cost of this modification is low, so it could easily be done: we just need to add inverse and forward gamma to the image processing pipeline on GPU.

There is one more interesting approach to the same JPEG resize task. We can do JPEG decoding on a multicore CPU with libjpeg-turbo. Each image could be decoded in a separate CPU thread, while the rest of the image processing is done on GPU. With a sufficient number of CPU cores we could achieve high performance decoding on CPU, though the latency will degrade significantly. If latency is not the priority, that approach could be very fast as well, especially when the original image resolution is small.

General requirements for fast jpg resizer

Full pipeline for web resize, step by step

Software parameters for JPEG Resize

Important limitations for Web Resizer

We can get very fast JPEG decoding on GPU only if all our images have built-in restart markers. Without restart markers JPEG decoding can't be made a parallel algorithm, and we will not get high performance at the decoding stage. That’s why we need to prepare the database with images that have a sufficient number of restart markers.

At the moment we believe the JPEG compression algorithm is the best choice for such a task, because the JPEG codec on GPU is much faster than codecs for competing image compression formats: WebP, PNG, TIFF, JPEG2000, etc. This is not just a matter of format choice; it is a matter of which high-performance codecs are available for these formats.

The standard image resolution for the prepared database could be 1K, 2K, 4K or anything else. Our solution will work with any image size, but total performance could differ.

Performance measurements for resize of 1K and 2K jpg images

We’ve done testing on NVIDIA Tesla V100 (OS Windows Server 2016, 64-bit, driver 24.21.13.9826) on the 24-bit images 1k_wild.ppm and 2k_wild.ppm with resolutions 1K and 2K (1280×720 and 1920×1080). Tests were done with different numbers of threads running on the same GPU. Processing 2K images takes around 110 MB of GPU memory per thread, so four threads need up to 440 MB.

First we encoded the test images to JPEG with quality 90% and subsampling 4:2:0 or 4:4:4. Then we ran the test application, which did decoding, resizing, sharpening and encoding with the same quality and subsampling. Input JPEG images resided in system memory, and we copied the processed image from GPU back to system memory as well. We measured the timing of that whole procedure.

Command line example to process 1K image:

Performance for 1K images

Performance for 2K images

JPEG subsampling 4:2:0 for the input image leads to slower performance, but the input and output files are smaller in that case. For subsampling 4:4:4 we get better performance, though the files are bigger. Total performance is mostly limited by the JPEG decoder module, and this is the key algorithm to improve to get a faster solution in the future.

Summary

From the above tests we see that on just one NVIDIA Tesla V100 GPU, resize performance could reach 1000 fps for 1K images and 900 fps for 2K images at the specified test parameters for JPEG Resize. To get maximum speed, we need to run 2-4 threads on the same GPU.

Latency of around just one millisecond is a very good result. To the best of our knowledge, one can’t get such latency on CPU for that task, and this is one more strong argument for GPU-based resize of JPEG images in high performance professional solutions.

To process one billion JPEG images with 1K or 2K resolutions per day, we need up to 16 NVIDIA Tesla V100 GPUs for the JPEG Resize on-the-fly task. Some of our customers have already implemented that solution at their facilities; others are currently testing the software.

Please note that GPU-based resize could be very useful not only for high load web services. There are many more high performance imaging applications where fast resize could be really important. For example, it could be used at the final stage of almost any image processing pipeline, before image output to a monitor. The software can work with any NVIDIA GPU: mobile, laptop, desktop, server.

Benefits of GPU-based JPEG Resizer

To whom it may concern

Fast resize of JPEG images is definitely an issue for high load web services, big online stores, social networks, online photo management and sharing applications, e-commerce services and enterprise-level software. Fast resize can offer better results in less time and at less cost.

Software developers could benefit from a GPU-based library that resizes jpg images on GPU with latency in the range of several milliseconds.

That solution could also be a rival to the NVIDIA DALI project for fast jpg loading at the training stage of Machine Learning or Deep Learning frameworks. We can offer super high performance for JPEG decoding together with resize and other image augmentation features on GPU, to make that solution useful for fast data loading in CNN training. Please contact us about this if you are interested.

Roadmap for jpg resize algorithm

Useful links

Update #1

The latest version of the software offers 1500 fps performance on NVIDIA Tesla V100 for 1K images at the same testing conditions (without CUDA MPS).
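The capacity estimate of one billion images per day can be sanity-checked with simple arithmetic. The sketch below is a rough illustration, not a sizing tool: it assumes the load is spread evenly over 24 hours and that each GPU sustains the measured frame rate. The helper name `gpus_needed` is hypothetical.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60  # 86400


def gpus_needed(images_per_day: int, fps_per_gpu: float) -> int:
    """Minimum number of GPUs for an evenly distributed daily load."""
    required_fps = images_per_day / SECONDS_PER_DAY
    return math.ceil(required_fps / fps_per_gpu)


# Per-GPU rates quoted in the article for Tesla V100.
for label, fps in [("1K at 1000 fps", 1000),
                   ("2K at 900 fps", 900),
                   ("1K at 1500 fps (Update #1)", 1500)]:
    print(label, "->", gpus_needed(1_000_000_000, fps), "GPUs")
```

One billion images per day is about 11,574 images per second on average, which gives 12 GPUs at 1000 fps, 13 at 900 fps and 8 at 1500 fps. The figure of up to 16 GPUs quoted earlier therefore leaves headroom above the average load; how that margin was chosen is not stated in the article.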